Why FastGPT

It supports RAG and visualizes both the training process and the results of local knowledge bases.

version: '3.3'
services:
  # db
  pg:
    #image: pgvector/pgvector:0.7.0-pg15 # docker hub
    image: registry.cn-hangzhou.aliyuncs.com/fastgpt/pgvector:v0.7.0 # Alibaba Cloud mirror
    container_name: pg
    restart: always
    ports: # recommended not to expose this port in production
      - 5432:5432
    networks:
      - fastgpt
    environment:
      # These settings only take effect on the first run. Changing them and restarting the container has no effect; delete the persisted data and restart for changes to apply.
      - POSTGRES_USER=username
      - POSTGRES_PASSWORD=password
      - POSTGRES_DB=postgres
    volumes:
      - ./pg/data:/var/lib/postgresql/data
  mongo:
    #image: mongo:5.0.18 # dockerhub
    image: registry.cn-hangzhou.aliyuncs.com/fastgpt/mongo:5.0.18 # Alibaba Cloud mirror
    # image: mongo:4.4.29 # use this one if the CPU does not support AVX
    container_name: mongo
    restart: always
    ports:
      - 27017:27017
    networks:
      - fastgpt
    command: mongod --keyFile /data/mongodb.key --replSet rs0
    environment:
      - MONGO_INITDB_ROOT_USERNAME=myusername
      - MONGO_INITDB_ROOT_PASSWORD=mypassword
    volumes:
      - ./mongo/data:/data/db
    entrypoint:
      - bash
      - -c
      - |
        openssl rand -base64 128 > /data/mongodb.key
        chmod 400 /data/mongodb.key
        chown 999:999 /data/mongodb.key
        echo 'const isInited = rs.status().ok === 1
        if(!isInited){
          rs.initiate({
            _id: "rs0",
            members: [
              { _id: 0, host: "mongo:27017" }
            ]
          })
        }' > /data/initReplicaSet.js
        # Start the MongoDB service
        exec docker-entrypoint.sh "$$@" &
        # Wait for the MongoDB service to start
        until mongo -u myusername -p mypassword --authenticationDatabase admin --eval "print('waited for connection')" > /dev/null 2>&1; do
          echo "Waiting for MongoDB to start..."
          sleep 2
        done
        # Run the replica-set initialization script
        mongo -u myusername -p mypassword --authenticationDatabase admin /data/initReplicaSet.js
        # Wait for the MongoDB process started by docker-entrypoint.sh
        wait $$!
  # fastgpt
  sandbox:
    container_name: sandbox
    #image: ghcr.io/labring/fastgpt-sandbox:v4.8.21 # git
    image: registry.cn-hangzhou.aliyuncs.com/fastgpt/fastgpt-sandbox:v4.8.21 # Alibaba Cloud mirror
    networks:
      - fastgpt
    restart: always
  fastgpt:
    container_name: fastgpt
    #image: ghcr.io/labring/fastgpt:v4.8.21 # git
    image: registry.cn-hangzhou.aliyuncs.com/fastgpt/fastgpt:v4.8.21 # Alibaba Cloud mirror
    ports:
      - 3000:3000
    networks:
      - fastgpt
    depends_on:
      - mongo
      - pg
      - sandbox
    restart: always
    environment:
      # Frontend access URL: http://localhost:3000
      - FE_DOMAIN=http://10.0.0.110:3000;http://10.20.0.33:3000
      # root password; the username is root. To change the root password, edit this variable and restart.
      - DEFAULT_ROOT_PSW=123456
      # Base URL of the AI model API. It must end with /v1. Pre-filled with the OneAPI address.
      - OPENAI_BASE_URL=http://oneapi:3000/v1
      # API key for the AI model (pre-filled with OneAPI's quick-start default key; change it as soon as the connection test passes)
      - CHAT_API_KEY=sk-123456
      # Maximum number of database connections
      - DB_MAX_LINK=30
      # Secret key for login tokens
      - TOKEN_KEY=any
      # root key, typically used for initialization requests during upgrades
      - ROOT_KEY=root_key
      # Encryption key for file-read links
      - FILE_TOKEN_KEY=filetoken
      # MongoDB connection string. Username myusername, password mypassword.
      - MONGODB_URI=mongodb://myusername:mypassword@mongo:27017/fastgpt?authSource=admin
      # PostgreSQL connection string
      - PG_URL=postgresql://username:password@pg:5432/postgres
      # sandbox address
      - SANDBOX_URL=http://sandbox:3000
      # Log level: debug, info, warn, error
      - LOG_LEVEL=info
      - STORE_LOG_LEVEL=warn
      # Maximum number of workflow runs
      - WORKFLOW_MAX_RUN_TIMES=1000
      # Maximum input length for batch-execution nodes
      - WORKFLOW_MAX_LOOP_TIMES=100
      # Custom CORS origins; if unset, all origins are allowed (separate multiple domains with commas)
      - ALLOWED_ORIGINS=
      # Whether to enable IP limiting; disabled by default
      - USE_IP_LIMIT=false
    volumes:
      - ./config.json:/app/data/config.json
  # oneapi
  oneapi:
    container_name: oneapi
    image: ghcr.io/songquanpeng/one-api:v0.6.7
    #image: registry.cn-hangzhou.aliyuncs.com/fastgpt/one-api:v0.6.7 # Alibaba Cloud mirror
    ports:
      - 3001:3000
    networks:
      - fastgpt
    restart: always
    environment:
      # MySQL connection string
      - SQL_DSN=root:123456@tcp(10.0.0.110:3306)/oneapi
      # Encryption key for login sessions
      - SESSION_SECRET=123456
      # In-memory cache
      - MEMORY_CACHE_ENABLED=true
      # Enable batched updates to reduce how often data is written
      - BATCH_UPDATE_ENABLED=true
      # Batch update interval (seconds)
      - BATCH_UPDATE_INTERVAL=10
      # Initial root token (change it after deployment, otherwise it is easily leaked)
      - INITIAL_ROOT_TOKEN=123456
    volumes:
      - ./oneapi:/data
networks:
  fastgpt:
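Several values in the `fastgpt` service have to agree with other parts of the compose file:

- The credentials in `MONGODB_URI` must match `MONGO_INITDB_ROOT_USERNAME`/`MONGO_INITDB_ROOT_PASSWORD`.
- The credentials in `PG_URL` must match `POSTGRES_USER`/`POSTGRES_PASSWORD`.
- `OPENAI_BASE_URL` has to end with `/v1`.

When you replace the sample passwords, a minimal Python sketch like the one below can generate the three values consistently. The helper names are hypothetical. The percent-encoding step assumes a new password might contain characters such as `@` or `:`, which would otherwise break the URI.

```python
from urllib.parse import quote


def mongodb_uri(user: str, password: str, host: str = "mongo", port: int = 27017,
                db: str = "fastgpt", auth_source: str = "admin") -> str:
    """Build a MONGODB_URI with the same shape as the compose file."""
    # Percent-encode credentials so '@', ':' or '/' in a password
    # cannot be mistaken for URI delimiters.
    u, p = quote(user, safe=""), quote(password, safe="")
    return f"mongodb://{u}:{p}@{host}:{port}/{db}?authSource={auth_source}"


def pg_url(user: str, password: str, host: str = "pg", port: int = 5432,
           db: str = "postgres") -> str:
    """Build a PG_URL with the same shape as the compose file."""
    u, p = quote(user, safe=""), quote(password, safe="")
    return f"postgresql://{u}:{p}@{host}:{port}/{db}"


def openai_base_url(url: str) -> str:
    """Append /v1 to an OpenAI-compatible base URL if it is missing."""
    url = url.rstrip("/")
    return url if url.endswith("/v1") else url + "/v1"


if __name__ == "__main__":
    # Sample credentials from the compose file above.
    print(mongodb_uri("myusername", "mypassword"))
    print(pg_url("username", "password"))
    print(openai_base_url("http://oneapi:3000"))
```

With the sample credentials, both connection strings come out identical to the ones already set in the `fastgpt` service.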

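Why AnythingLLM

It lets you invite other users and is a little faster than Open WebUI. Its admin panel also shows each user's usage, which makes later upgrades and tuning easier.

Why Ollama

It makes running and calling a wide range of AI models quick and easy.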

version: '3.8'
services:
  deepseek:
    container_name: deepseek
    image: ollama/ollama:0.5.8
    volumes:
      - /usr/share/ollama/.ollama:/root/.ollama
    ports:
      - 11434:11434
    restart: unless-stopped
    environment:
      NVIDIA_VISIBLE_DEVICES: all
    deploy:
      resources:
        reservations:
          devices:
            - driver: "nvidia"
              count: "all"
              capabilities: ["gpu"]
  anythingllm:
    container_name: anythingllm
    image: mintplexlabs/anythingllm
    ports:
      - "3002:3001"
    cap_add:
      - SYS_ADMIN
    volumes:
      - ./anythingllm/.env:/app/server/.env
      - ./anythingllm/storage:/app/server/storage
    environment:
      STORAGE_DIR: /app/server/storage
      OLLAMA_BASE_URL: http://deepseek:11434
      NVIDIA_VISIBLE_DEVICES: all
    deploy:
      resources:
        reservations:
          devices:
            - driver: "nvidia"
              count: "all"
              capabilities: ["gpu"]
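Once the `deepseek` container is up, Ollama's REST API is reachable on host port 11434. The sketch below uses only the standard library and just builds the requests for two endpoints:

- `GET /api/tags` lists the models that have been pulled.
- `POST /api/generate` runs a prompt, here with streaming turned off.

Passing `keep_alive=-1` asks Ollama to keep the model loaded. This appears to be what `OLLAMA_KEEP_ALIVE_TIMEOUT='-1'` in the AnythingLLM `.env` requests. The `localhost` base URL is an assumption that you run the code on the Docker host.

```python
import json
from urllib.request import Request

# Host port published by the "deepseek" (Ollama) service; assumes the Docker host.
OLLAMA = "http://localhost:11434"


def list_models_request(base: str = OLLAMA) -> Request:
    """GET /api/tags: list the locally pulled models."""
    return Request(f"{base}/api/tags", method="GET")


def generate_request(model: str, prompt: str, base: str = OLLAMA,
                     keep_alive: int = -1) -> Request:
    """POST /api/generate with streaming disabled.

    A negative keep_alive keeps the model loaded in memory indefinitely.
    """
    body = {"model": model, "prompt": prompt, "stream": False,
            "keep_alive": keep_alive}
    return Request(f"{base}/api/generate",
                   data=json.dumps(body).encode("utf-8"),
                   headers={"Content-Type": "application/json"},
                   method="POST")


if __name__ == "__main__":
    # Only builds the requests; nothing is sent here.
    req = generate_request("deepseek-r1_32b_max:latest", "hello")
    print(req.get_method(), req.full_url)
```

To actually send a request, pass it to `urllib.request.urlopen`. For example, `json.load(urlopen(generate_request(...)))["response"]` holds the generated text when streaming is off.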

anythingllm/.env

# Auto-dump ENV from system call on 03:42:00 GMT+0000 (Coordinated Universal Time)

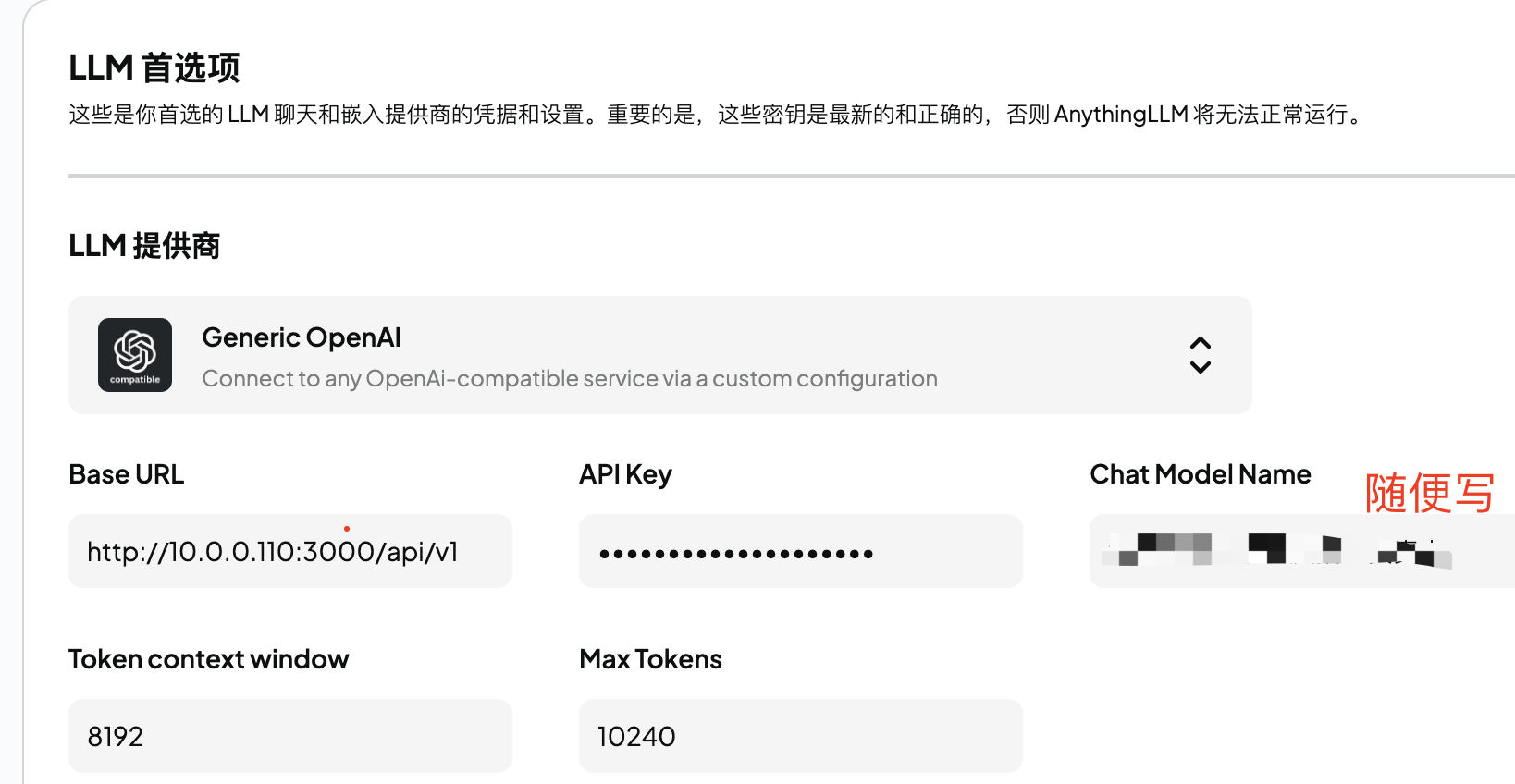

LLM_PROVIDER='generic-openai'

EMBEDDING_MODEL_PREF='bge-m3:latest'

LOCAL_AI_BASE_PATH='http://10.0.0.110:3000/api/v1'

LOCAL_AI_MODEL_TOKEN_LIMIT='8192'

LOCAL_AI_API_KEY='fastgpt-123456'

OLLAMA_BASE_PATH='http://deepseek:11434'

OLLAMA_MODEL_PREF='deepseek-r1_32b_max:latest'

OLLAMA_MODEL_TOKEN_LIMIT='131072'

OLLAMA_PERFORMANCE_MODE='maximum'

OLLAMA_KEEP_ALIVE_TIMEOUT='-1'

GENERIC_OPEN_AI_BASE_PATH='http://10.0.0.110:3000/api/v1'

GENERIC_OPEN_AI_MODEL_PREF='太原理工大学重庆招生负责人'

GENERIC_OPEN_AI_MODEL_TOKEN_LIMIT='8192'

GENERIC_OPEN_AI_API_KEY='fastgpt-123456'

GENERIC_OPEN_AI_MAX_TOKENS='10240'

EMBEDDING_ENGINE='native'

EMBEDDING_BASE_PATH='http://deepseek:11434'

EMBEDDING_MODEL_MAX_CHUNK_LENGTH='8192'

VECTOR_DB='lancedb'

MILVUS_ADDRESS='http://localhost:19530'

MILVUS_USERNAME='admin'

MILVUS_PASSWORD='123456'

JWT_SECRET='my-random-string-for-seeding'

STORAGE_DIR='/app/server/storage'

SERVER_PORT='3001'

SIG_KEY='passphrase'

SIG_SALT='salt'
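AnythingLLM auto-dumps this `.env`, and it also keeps settings for providers that are not selected. Examples are the `MILVUS_*` keys while `VECTOR_DB='lancedb'`, and the `LOCAL_AI_*` keys while `LLM_PROVIDER='generic-openai'`; these are presumably ignored. A small parser makes it easy to check which values are actually set.

This is a minimal sketch that handles only the `KEY='value'` lines seen here. It does not implement the full dotenv syntax: escapes, multi-line values and `export` prefixes are not supported.

```python
from typing import Dict


def parse_env(text: str) -> Dict[str, str]:
    """Parse KEY='value' lines as they appear in the auto-dumped .env."""
    env: Dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        # Skip blank lines, comments and anything that is not an assignment.
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, value = line.split("=", 1)
        value = value.strip()
        # Strip one pair of matching single or double quotes.
        if len(value) >= 2 and value[0] == value[-1] and value[0] in "'\"":
            value = value[1:-1]
        env[key.strip()] = value
    return env


if __name__ == "__main__":
    sample = "# comment\nLLM_PROVIDER='generic-openai'\nVECTOR_DB='lancedb'\n"
    cfg = parse_env(sample)
    print(cfg["LLM_PROVIDER"], cfg["VECTOR_DB"])
```

For the real file, `parse_env(open("anythingllm/.env", encoding="utf-8").read())` returns a dict keyed by the variable names above.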

Configuring Ollama models for FastGPT

First, configure a channel in OneAPI.

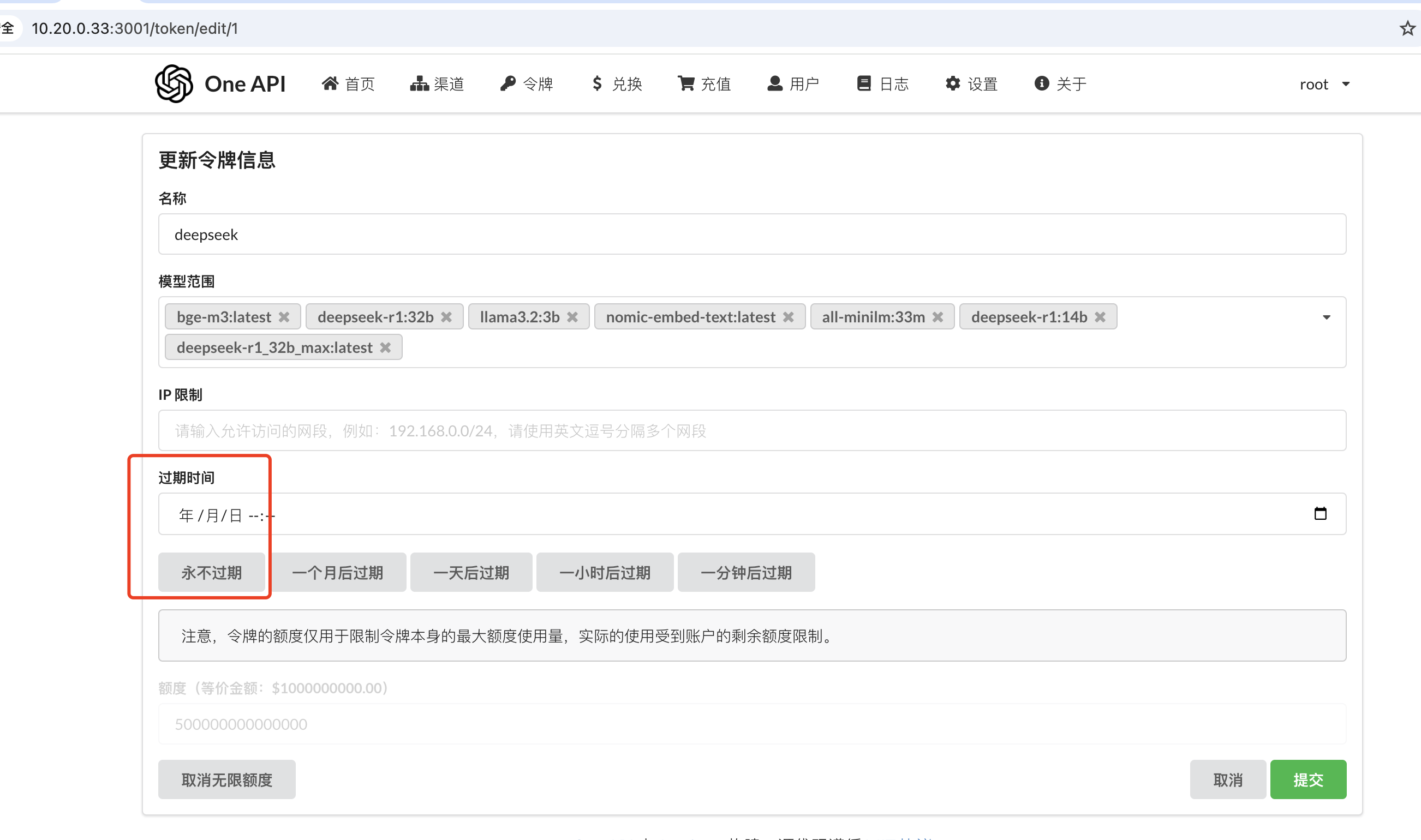

Second, create a token in OneAPI and set it to never expire.

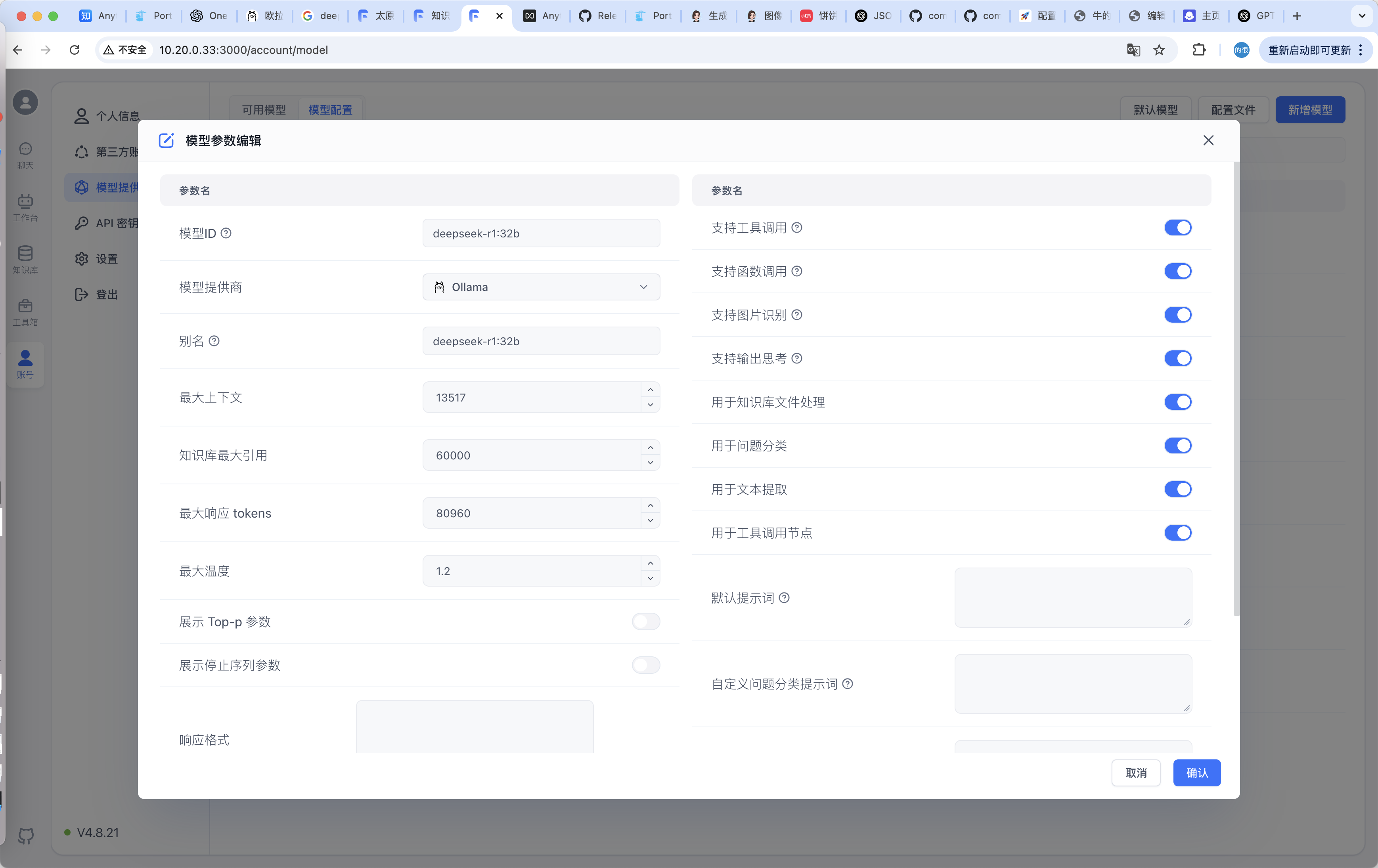
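The token created in this step is the value FastGPT's `CHAT_API_KEY` should hold. Before wiring it into FastGPT, you can test it directly against OneAPI, which the compose file publishes on host port 3001.

The sketch builds two requests: a `GET /v1/models` request and an OpenAI-style chat request. `localhost`, the token and the model name are placeholders; use whatever your host and channel actually expose.

```python
import json
from urllib.request import Request

# OneAPI is published on host port 3001 (3001:3000); assumes the Docker host.
ONEAPI_BASE = "http://localhost:3001/v1"


def models_request(token: str, base: str = ONEAPI_BASE) -> Request:
    """GET /v1/models: list the models this token may use."""
    return Request(f"{base}/models",
                   headers={"Authorization": f"Bearer {token}"},
                   method="GET")


def chat_request(token: str, model: str, text: str,
                 base: str = ONEAPI_BASE) -> Request:
    """POST /v1/chat/completions in the OpenAI request format."""
    body = {"model": model, "stream": False,
            "messages": [{"role": "user", "content": text}]}
    return Request(f"{base}/chat/completions",
                   data=json.dumps(body).encode("utf-8"),
                   headers={"Authorization": f"Bearer {token}",
                            "Content-Type": "application/json"},
                   method="POST")


if __name__ == "__main__":
    # Placeholder token; nothing is sent here.
    print(models_request("sk-your-token").full_url)
```

If `urlopen(models_request(token))` lists the Ollama model configured in the channel, the token and channel are working.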

Finally, configure the Ollama model in FastGPT.

How to configure FastGPT's API in AnythingLLM
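In the `.env` above, AnythingLLM's `generic-openai` provider points at FastGPT as an OpenAI-compatible endpoint. The base path is FastGPT's `/api/v1`, and the key is a FastGPT app API key (the `fastgpt-...` value).

The sketch below builds roughly the request such a client sends, using the values from that `.env`, so the FastGPT side can be tested without AnythingLLM. The optional `chatId` field is FastGPT's own extension for keeping conversation history. Because the key selects the FastGPT app, the `model` string is presumably informational only.

```python
import json
from typing import Optional
from urllib.request import Request

# Values taken from the AnythingLLM .env; replace them with your own.
FASTGPT_BASE = "http://10.0.0.110:3000/api/v1"  # GENERIC_OPEN_AI_BASE_PATH
FASTGPT_KEY = "fastgpt-123456"                   # GENERIC_OPEN_AI_API_KEY
MODEL = "太原理工大学重庆招生负责人"               # GENERIC_OPEN_AI_MODEL_PREF


def fastgpt_chat_request(text: str, chat_id: Optional[str] = None,
                         base: str = FASTGPT_BASE, key: str = FASTGPT_KEY,
                         model: str = MODEL) -> Request:
    """Build an OpenAI-style chat request against FastGPT's app API."""
    body = {"model": model, "stream": False,
            "messages": [{"role": "user", "content": text}]}
    if chat_id is not None:
        # Reusing a chatId lets FastGPT keep the conversation history.
        body["chatId"] = chat_id
    return Request(f"{base.rstrip('/')}/chat/completions",
                   data=json.dumps(body, ensure_ascii=False).encode("utf-8"),
                   headers={"Authorization": f"Bearer {key}",
                            "Content-Type": "application/json"},
                   method="POST")


if __name__ == "__main__":
    # Only builds the request; nothing is sent here.
    print(fastgpt_chat_request("你好").full_url)
```

Send the request with `urllib.request.urlopen` and read the reply from `choices[0].message.content` in the JSON response.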